Artificial Intelligence (AI) has become a buzzword in various sectors, and nonprofits are no exception. As organizations dedicated to social good, nonprofits are increasingly leveraging AI to enhance their operations, improve service delivery, and make data-driven decisions. However, these capabilities come with real responsibility.

The integration of AI into nonprofit work raises a host of ethical questions that must be addressed to ensure that these technologies serve their intended purpose without causing harm. Understanding AI ethics is not merely an academic exercise; it is a practical necessity for nonprofits striving to maintain their integrity and mission.

The ethical landscape surrounding AI is complex and multifaceted. Nonprofits often operate in sensitive areas such as healthcare, education, and social justice, where the stakes are high. The decisions made by AI systems can significantly impact vulnerable populations, making it imperative for organizations to navigate this terrain with care. By prioritizing ethical considerations, nonprofits can harness the potential of AI while safeguarding the values that underpin their work.

This article will explore the ethical implications of AI for nonprofits, focusing on transparency, accountability, bias, data privacy, governance, and collaboration with stakeholders.

Understanding the Ethical Implications of AI for Nonprofits

At its core, the ethical implications of AI for nonprofits revolve around the potential consequences of deploying these technologies in real-world scenarios. Nonprofits often rely on data to inform their strategies and interventions, but the algorithms that process this data can introduce unforeseen biases and inaccuracies. For instance, an AI system designed to allocate resources might inadvertently favor certain demographics over others, leading to unequal access to services. This is particularly concerning in sectors like healthcare, where biased algorithms can exacerbate existing disparities.

Moreover, the use of AI can raise questions about autonomy and agency. When organizations rely heavily on automated systems to make decisions, there is a risk of undermining human judgment and expertise. Nonprofits must grapple with the balance between leveraging technology for efficiency and ensuring that human values remain at the forefront of their mission. This requires a thoughtful examination of how AI tools are developed and implemented, as well as a commitment to ongoing evaluation and adjustment based on ethical considerations.

Ensuring Transparency and Accountability in AI Use

The “Do No Harm” approach is as good a way as any to handle AI

Transparency is a cornerstone of ethical AI use, especially for nonprofits that operate under a public trust. Stakeholders, including donors, beneficiaries, and community members, have a right to understand how AI systems are being used and the rationale behind their decisions. This means that nonprofits must be willing to share information about the algorithms they employ, the data they collect, and the outcomes they aim to achieve. By fostering an environment of openness, organizations can build trust with their constituents and demonstrate their commitment to ethical practices.

Accountability goes hand in hand with transparency. Nonprofits must establish clear lines of responsibility for the use of AI within their organizations. This includes identifying who is responsible for overseeing AI initiatives, as well as creating mechanisms for addressing any negative consequences that may arise from their use. For example, if an AI system leads to an unintended outcome, such as misallocating resources, there should be a process in place for rectifying the situation and learning from the experience. By holding themselves accountable, nonprofits can reinforce their ethical commitments and ensure that they remain true to their mission.

Addressing Bias and Fairness in AI Algorithms

Bias in AI algorithms is a pressing concern that nonprofits must confront head-on. Algorithms are only as good as the data they are trained on, and if that data reflects historical inequalities or prejudices, the resulting AI systems will perpetuate those biases. For instance, if a nonprofit uses an AI tool to screen applicants for assistance but relies on biased historical data, it may inadvertently disadvantage certain groups. This not only undermines the organization’s mission but also risks harming the very populations it seeks to help.

To combat bias, nonprofits should prioritize fairness in their AI initiatives. This involves conducting thorough audits of algorithms to identify potential biases and implementing strategies to mitigate them. Techniques such as diversifying training data, employing fairness-aware algorithms, and involving diverse teams in the development process can help create more equitable outcomes. Additionally, nonprofits should engage with affected communities to understand their perspectives and experiences, ensuring that their voices are heard in the design and implementation of AI systems.
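As one illustration of what an algorithm audit might look like in practice, the sketch below compares approval rates across groups for a screening tool and computes the ratio of the lowest rate to the highest. The function names, the sample data, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb used in US employment contexts) are assumptions made for this example, not something the article prescribes. A low ratio is a prompt for closer human review, not proof of unfairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the tool recommended assistance.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved at all, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups (illustrative only).
sample = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

# Assumed review threshold; an organization would choose its own.
REVIEW_THRESHOLD = 0.8
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"ratio={ratio:.3f}, needs_review={needs_review}")
```

A check like this only covers one narrow notion of fairness (equal approval rates). It says nothing about whether the underlying data is accurate or whether the groups have different needs, which is why the community engagement described above still matters.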

Safeguarding Data Privacy and Security in AI Applications

Data privacy is another critical aspect of AI ethics that nonprofits must navigate carefully. Many organizations collect sensitive information about individuals they serve, and the use of AI can amplify concerns about data security and privacy breaches. Nonprofits have a moral obligation to protect this information from unauthorized access or misuse. This means implementing robust security measures and adhering to best practices for data management.

Moreover, nonprofits should be transparent about how they collect, store, and use data in conjunction with AI technologies. Beneficiaries should be informed about what data is being collected and how it will be used, allowing them to make informed choices about their participation. By prioritizing data privacy and security, nonprofits can foster trust with their constituents while also complying with regulations such as GDPR or HIPAA where they apply.
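One common data-management practice that fits the points above is to strip records down to the fields an AI tool actually needs and to replace direct identifiers with keyed hashes before the data leaves the case-management system. The sketch below is a minimal illustration under stated assumptions: the field names, the record, and the key handling are hypothetical, and pseudonymized data generally still counts as personal data under GDPR, so this reduces exposure rather than removing legal obligations.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 hash of an identifier.

    The same identifier and key always give the same token, so records
    can still be linked without exposing the original value.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, allowed_fields: set, id_field: str, secret_key: bytes) -> dict:
    """Keep only the allowed fields and add a pseudonymous ID."""
    cleaned = {key: value for key, value in record.items() if key in allowed_fields}
    cleaned["pseudo_id"] = pseudonymize(str(record[id_field]), secret_key)
    return cleaned


# Hypothetical beneficiary record and field choices (illustrative only).
record = {
    "client_id": "C-1042",
    "name": "Jane Example",
    "phone": "555-0100",
    "household_size": 4,
    "service_requested": "food assistance",
}

# Assumption: in real use the key would come from a secrets manager,
# never from source code.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"

safe_record = minimize_record(
    record,
    allowed_fields={"household_size", "service_requested"},
    id_field="client_id",
    secret_key=SECRET_KEY,
)
print(safe_record)
```

Using a keyed hash rather than a plain hash means someone without the key cannot rebuild the token simply by hashing a list of known client IDs.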

Promoting Responsible and Ethical AI Governance

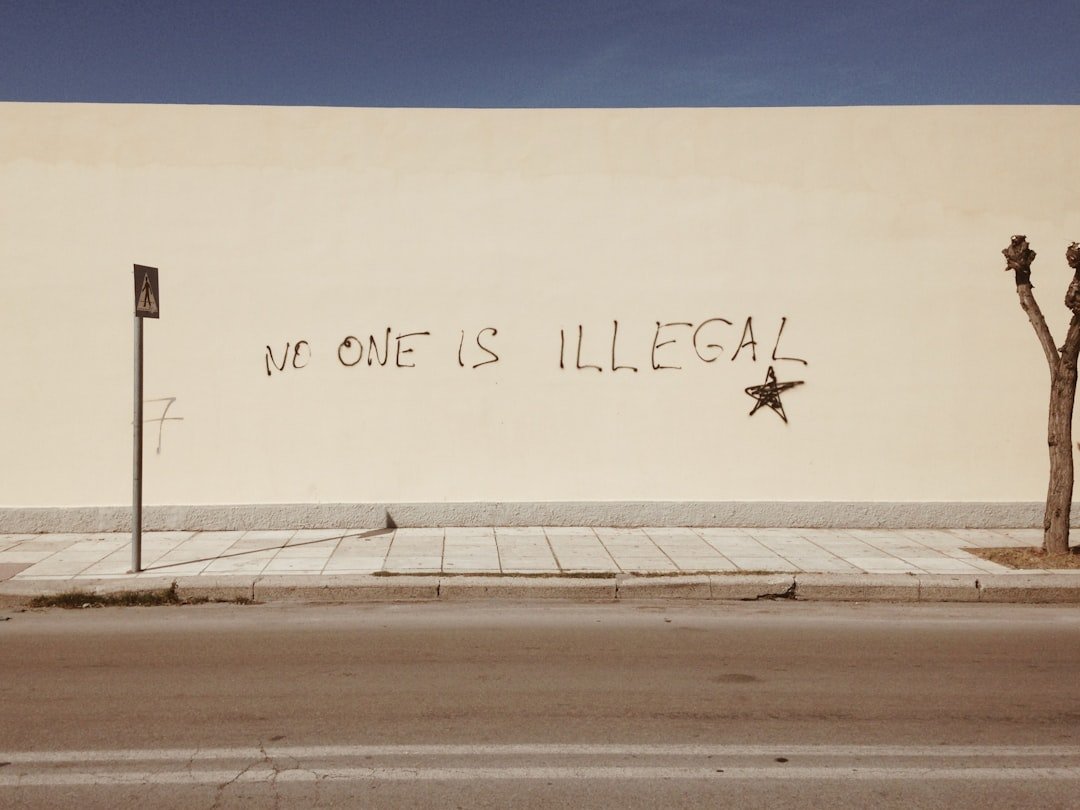

Sometimes a real image is far more powerful than an AI-generated image

Establishing a framework for responsible AI governance is essential for nonprofits looking to navigate the ethical challenges associated with these technologies. This involves creating policies and guidelines that outline how AI will be used within the organization while ensuring alignment with its mission and values. A well-defined governance structure can help organizations make informed decisions about when and how to deploy AI tools.

In addition to internal governance, nonprofits should also advocate for broader ethical standards within the sector. By collaborating with other organizations and stakeholders, they can contribute to the development of industry-wide best practices that promote responsible AI use. This collective effort can help create a culture of accountability and ethical awareness that extends beyond individual organizations.

Collaborating with Stakeholders and Experts in AI Ethics

Collaboration is key when it comes to addressing the ethical challenges posed by AI in the nonprofit sector. Engaging with stakeholders—including beneficiaries, community members, policymakers, and technology experts—can provide valuable insights into the potential impacts of AI initiatives. By fostering open dialogue and soliciting feedback from diverse perspectives, nonprofits can better understand the ethical implications of their work.

Additionally, partnering with experts in AI ethics can enhance an organization’s capacity to navigate complex issues related to technology use. These experts can provide guidance on best practices for algorithm development, bias mitigation strategies, and data privacy considerations. By leveraging external expertise, nonprofits can strengthen their ethical frameworks and ensure that they are making informed decisions about their use of AI.

Navigating the Ethical Challenges of AI for Nonprofits

As nonprofits increasingly embrace AI technologies, they must remain vigilant about the ethical implications of their use. By prioritizing transparency, accountability, bias mitigation, data privacy, responsible governance, and collaboration with stakeholders, organizations can navigate the complex landscape of AI ethics effectively. The journey may be fraught with challenges, but by committing to ethical principles, nonprofits can harness the power of AI to further their missions while upholding the values that define their work.

In this rapidly evolving technological landscape, it is essential for nonprofits to stay informed about emerging trends in AI ethics and adapt their practices accordingly. The stakes are high; the decisions made today will shape the future of nonprofit work in profound ways. By taking a proactive approach to ethical considerations in AI use, organizations can not only enhance their effectiveness but also contribute to a more equitable society where technology serves as a force for good rather than a source of harm.